As companies increasingly go digital, visual information is becoming the lifeblood of operations. From manufacturing to healthcare diagnostics, organizations are investing in software that can interpret images and video. Central to this shift is Computer Vision (CV).

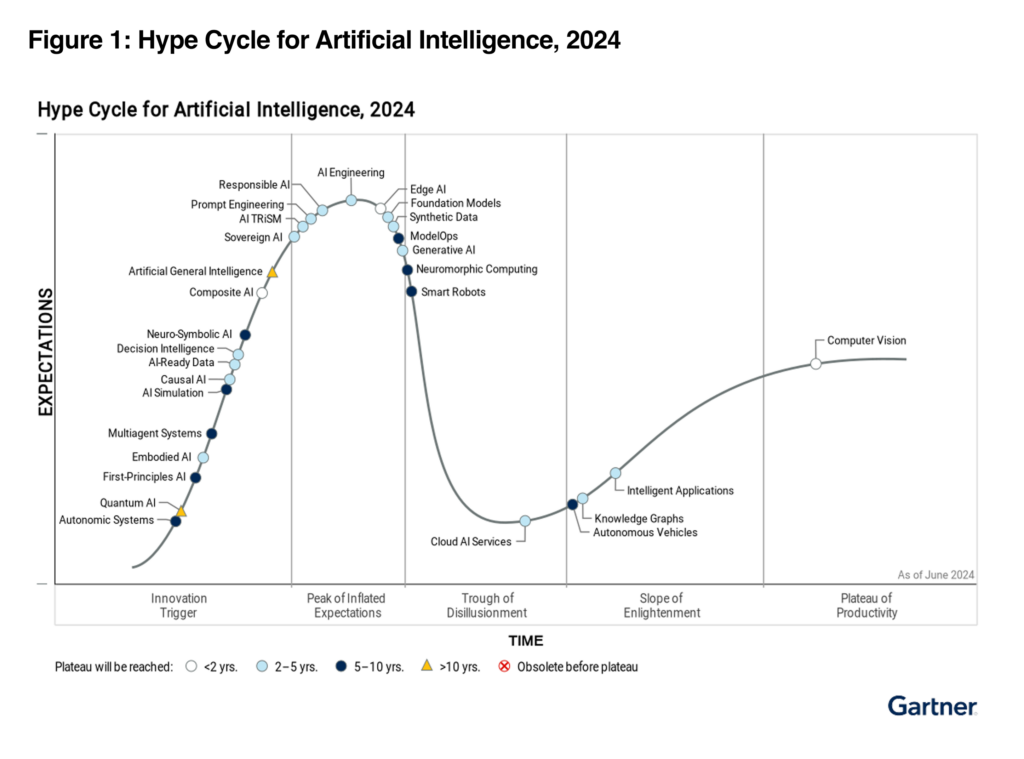

According to Gartner, computer vision is a set of technologies for capturing, processing, and analyzing real-world images and video to extract meaningful, contextual information from the physical world. In the 2024 Gartner Hype Cycle for Artificial Intelligence, Computer Vision sits on the Plateau of Productivity, a phase where real-world use cases have matured, adoption is increasing steadily, and tangible ROI is visible.

Gartner describes this stage as one in which the real-world benefits of the innovation are demonstrated and accepted. Tools and methods become more stable as they enter their second and third generations, more organizations feel comfortable with the reduced level of risk, and the rapid-growth phase of adoption begins. About 20% of the technology’s target audience has adopted or is adopting it as it enters this stage.

Put differently, roughly one in five organizations in the technology’s target audience is actively implementing or using Computer Vision. That figure alone highlights how critical this technology has become.

Where Is Computer Vision Being Used?

Computer Vision isn’t limited to a single industry—it’s a horizontal technology. Some of the most significant applications are:

- Manufacturing: Defect detection, predictive maintenance

- Healthcare: Medical imaging, diagnostics

- Retail: Shelf monitoring, customer analytics

- Automotive: Driver assistance systems, autonomous navigation

- Security: Surveillance, facial recognition

- Agriculture: Crop monitoring, yield estimation

This breadth of use shows that Computer Vision is no longer a nascent technology. It is now a core enabler of automation, decision-making, and customer experience.

The Turning Point: Enter Generative AI

Just as organizations were beginning to fully leverage traditional Computer Vision, Generative AI (GenAI) emerged to push the boundaries even further.

We first encountered the idea of applying Generative AI to improve CV performance through AI pioneer Andrew Ng. Since then, it has moved from academic research to real-world application. Generative AI vision models, pre-trained on large datasets and adaptable to new tasks, are redefining what’s possible in visual intelligence. These models can now handle tasks like image classification, object detection, and part identification faster, more cost-effectively, and at scale. Let’s explore a real-world example that illustrates this shift.

The Use Case: Identifying Inventory Parts from Images

Imagine an employee takes a picture of an inventory component with their phone. The goal is to extract the part number and fetch relevant information.
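The flow described above can be sketched independently of which vision approach does the recognizing. This is a minimal illustration, not the pilot's actual code: the `PartInfo` fields, the catalog entries, and the `fake_recognizer` stand-in are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class PartInfo:
    part_number: str
    description: str
    bin_location: str


# Hypothetical catalog; in practice this would be an ERP or inventory lookup.
CATALOG = {
    "BRK-1042": PartInfo("BRK-1042", "Brake caliper bracket", "A-12"),
    "FLT-0087": PartInfo("FLT-0087", "Hydraulic oil filter", "C-03"),
}


def normalize(part_number: str) -> str:
    """Trim whitespace and upper-case so ' brk-1042' matches 'BRK-1042'."""
    return part_number.strip().upper()


def identify_and_fetch(
    image_bytes: bytes,
    recognizer: Callable[[bytes], str],
    catalog: dict = CATALOG,
) -> Optional[PartInfo]:
    """Run any recognizer on the photo, then look the result up in the catalog."""
    part_number = normalize(recognizer(image_bytes))
    return catalog.get(part_number)


# Stand-in recognizer so the sketch runs without a model (assumption).
def fake_recognizer(image_bytes: bytes) -> str:
    return " brk-1042\n"


result = identify_and_fetch(b"placeholder image bytes", fake_recognizer)
print(result)
```

Keeping the recognizer behind a plain callable means either of the two approaches below can be plugged in without changing the lookup step.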

Approach 1: Conventional Computer Vision

We used a model based on YOLO (You Only Look Once) and LRCN (Long-term Recurrent Convolutional Networks). This approach required:

- About 800 images for 8 inventory parts (~100 images per part)

- Manual data labeling

- Preprocessing and hyperparameter tuning

The resulting model achieved 85–90% accuracy.

While effective, this method was time- and resource-intensive.
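To give a sense of what manual labeling involves, here is a small sketch of the text label format commonly used by YOLO tooling: one line per object, holding a class index and a bounding box normalized to the image size. The article does not say which YOLO version or labeling tool was used, so the format, the box coordinates, and the class index below are assumptions for illustration.

```python
def to_yolo_label(class_id, box, image_width, image_height):
    """Convert a pixel box (x_min, y_min, x_max, y_max) to a YOLO label line.

    The line holds: class index, box center x and y, box width and height,
    each coordinate expressed as a fraction of the image dimensions.
    """
    x_min, y_min, x_max, y_max = box
    x_center = (x_min + x_max) / 2 / image_width
    y_center = (y_min + y_max) / 2 / image_height
    width = (x_max - x_min) / image_width
    height = (y_max - y_min) / image_height
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


# Hypothetical annotation: class 3, box drawn by hand on a 640x480 photo.
line = to_yolo_label(3, (160, 120, 480, 360), 640, 480)
print(line)

# Labeling workload implied by the figures above: 8 parts, ~100 images each.
parts, images_per_part = 8, 100
print(parts * images_per_part, "images to annotate, at least one box each")
```

Every one of those images needs a box drawn and checked by a person, which is where much of the time and cost of this approach goes.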

Approach 2: Generative AI Vision Model

Using a pre-trained GenAI model to perform the same task, the differences were immediate:

- No need for extensive manual labeling

- Minimal fine-tuning required

- Achieved similar accuracy

- Delivered results with lower infrastructure cost and faster deployment.
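As a rough illustration of why so little task-specific setup is needed, the sketch below packages a photo and a plain-language instruction for a multimodal model and parses the reply. It is provider-neutral by design: the request shape, the prompt wording, and the `send` callable are hypothetical and do not correspond to any specific vendor API or to the model used in the pilot.

```python
import base64
import json
import re
from typing import Callable, Optional

PROMPT = (
    "You are looking at a photo of an inventory part. "
    'Reply with JSON only, for example {"part_number": "ABC-123"}. '
    "Use null for part_number if it is not legible."
)


def build_request(image_bytes: bytes) -> dict:
    """Package the prompt and the base64-encoded image in a neutral dict."""
    return {
        "prompt": PROMPT,
        "image_base64": base64.b64encode(image_bytes).decode("ascii"),
    }


def parse_reply(reply_text: str) -> Optional[str]:
    """Extract the JSON object from the reply; return None if unusable."""
    match = re.search(r"\{.*\}", reply_text, flags=re.DOTALL)
    if match is None:
        return None
    try:
        data = json.loads(match.group(0))
    except json.JSONDecodeError:
        return None
    part = data.get("part_number")
    if isinstance(part, str) and part.strip():
        return part.strip().upper()
    return None


def identify_part(image_bytes: bytes, send: Callable[[dict], str]) -> Optional[str]:
    """`send` is whatever function forwards the request to your provider."""
    return parse_reply(send(build_request(image_bytes)))


# Stand-in for a real model call so the sketch runs offline (assumption).
def fake_send(request: dict) -> str:
    return 'Sure! {"part_number": "flt-0087", "confidence": 0.93}'


part = identify_part(b"placeholder image bytes", fake_send)
print(part)
```

The parsing step is deliberately defensive, since free-text models do not always return clean JSON.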

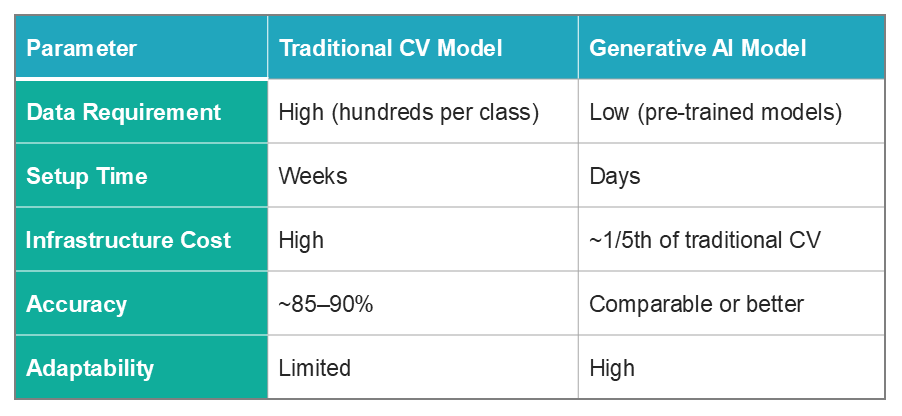

Comparative Breakdown

An Aside on Model Training: CV vs. Generative AI

Perhaps the most defining difference between traditional CV and GenAI is in how the models are trained:

- Traditional CV models (like YOLO or LRCN) require hundreds of labeled images per class, annotated data, setup time, and several retraining cycles. In our case, we needed nearly 800 images for just 8 inventory parts.

- Generative AI models leverage large foundation models trained on multi-domain datasets. They excel with few-shot or zero-shot learning, requiring little to no additional training. In our test, minimal fine-tuning delivered results on par with traditional models—with a fraction of the effort.

This difference in training methodology results in quicker experimentation, lower costs, and easier scalability.
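One common mechanism behind zero-shot classification, popularized by CLIP (listed in the references), is to embed the image and a text prompt for each candidate label in the same vector space and pick the closest match. The sketch below shows only that comparison step. The three-dimensional vectors are toy stand-ins for real encoder outputs, the temperature value is an assumption, and the article does not state that this is how the pilot's model works internally.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def zero_shot_classify(image_embedding, label_embeddings, temperature=0.07):
    """Softmax over cosine similarities between one image and each label prompt."""
    labels = list(label_embeddings)
    logits = [
        cosine(image_embedding, label_embeddings[label]) / temperature
        for label in labels
    ]
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return {label: e / total for label, e in zip(labels, exps)}


# Toy vectors standing in for text-encoder outputs (assumption).
label_embeddings = {
    "a photo of a brake caliper bracket": [0.9, 0.1, 0.0],
    "a photo of a hydraulic oil filter": [0.1, 0.9, 0.1],
    "a photo of a drive belt": [0.0, 0.2, 0.9],
}
# Toy vector standing in for the image-encoder output (assumption).
image_embedding = [0.2, 0.8, 0.1]

scores = zero_shot_classify(image_embedding, label_embeddings)
best = max(scores, key=scores.get)
print(best)
```

Under this scheme, supporting a ninth part means adding one more text prompt rather than collecting and labeling another hundred images.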

Why Generative AI is a Game-Changer for Vision

GenAI doesn’t just replicate CV tasks—it enhances them through:

1. Web-Based Retrieval and Contextual Search: GenAI models can match inputs against massive web datasets, detecting patterns even for objects they were never trained on locally.

2. Synthetic Image Generation: They can create artificial training samples, enriching datasets and improving model performance.

3. Transfer Learning and Foundation Models: With multi-domain learning, GenAI models use transfer learning to generalize better, requiring fewer domain-specific inputs.

4. Reduced Resource Footprint: These models are efficient, using less compute power and less human labor, which can translate into significant cost savings.

Things to Consider Before You Deploy

While promising, GenAI comes with caveats:

- This was a proof of concept (POC): Our pilot used only 8 products. Large-scale deployment requires deeper testing and edge-case handling.

- Latency: Depending on infrastructure and provider, responses from GenAI models may be slightly delayed, though the delays are usually brief.

- Governance: Like all AI tools, GenAI must adhere to privacy, explainability, and compliance standards.
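On the latency point, it is worth measuring rather than guessing. The sketch below times repeated calls and reports the median and 95th percentile in milliseconds. The `fake_model` function is a placeholder for whatever client function calls your provider, and the sample count is arbitrary.

```python
import statistics
import time


def measure_latency(call, payloads, clock=time.perf_counter):
    """Return per-call latencies in milliseconds for `call` over `payloads`."""
    latencies = []
    for payload in payloads:
        start = clock()
        call(payload)
        latencies.append((clock() - start) * 1000.0)
    return latencies


def summarize(latencies_ms):
    """Median and 95th percentile; needs at least two samples."""
    # n=20 yields 19 cut points at 5% steps; the last one is the 95th percentile.
    cuts = statistics.quantiles(latencies_ms, n=20, method="inclusive")
    return {"p50_ms": statistics.median(latencies_ms), "p95_ms": cuts[18]}


# Placeholder for a real model call (assumption); returns immediately.
def fake_model(payload):
    return "BRK-1042"


samples = measure_latency(fake_model, [b"placeholder image bytes"] * 20)
report = summarize(samples)
print(report)
```

Looking at the 95th percentile alongside the median matters here, because occasional slow responses are what users on a warehouse floor will actually notice.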

Traditional CV vs. GenAI: Which Should You Choose?

- Go with Traditional CV if you have static datasets, defined environments, and object consistency.

- Choose Generative AI when speed, adaptability, and minimal data are priorities.

In many cases, a hybrid approach works best: traditional CV where precision is needed and GenAI where flexibility is key.
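One way to picture a hybrid setup is a simple router: trust the trained CV model when it is confident, and hand everything else to the GenAI model. This is a sketch under assumptions, not a recommended architecture. The 0.85 threshold is illustrative, and the three stub models are hypothetical stand-ins.

```python
from typing import Callable, Optional, Tuple


def hybrid_identify(
    image_bytes: bytes,
    cv_model: Callable[[bytes], Tuple[Optional[str], float]],
    genai_model: Callable[[bytes], Optional[str]],
    min_confidence: float = 0.85,
) -> Tuple[Optional[str], str]:
    """Prefer the trained CV model; fall back to GenAI when it is unsure."""
    label, confidence = cv_model(image_bytes)
    if label is not None and confidence >= min_confidence:
        return label, "traditional_cv"
    return genai_model(image_bytes), "genai"


# Stub models so the sketch runs without any trained weights (assumption).
def confident_cv(image_bytes):
    return "BRK-1042", 0.97


def unsure_cv(image_bytes):
    return "BRK-1042", 0.40


def fake_genai(image_bytes):
    return "FLT-0087"


known = hybrid_identify(b"img", confident_cv, fake_genai)
novel = hybrid_identify(b"img", unsure_cv, fake_genai)
print(known, novel)
```

Returning the source alongside the label makes it easy to audit later how often each path was taken.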

The Opportunity for New Adopters

If your organization has hesitated to explore Computer Vision due to costs or data barriers, Generative AI may be your gateway.

It offers:

- Faster entry

- Lower initial investment

- Quicker ROI

- Greater flexibility across industries

Together, CV and GenAI are ushering in a future where machines don’t just see—they understand, learn, and adapt.

Ready to Get Started?

At Bristlecone, we help organizations harness Generative AI to enhance visual intelligence—whether in factories, warehouses, retail stores, or diagnostics labs. From pilot to production, we’ll help you deploy vision systems that are faster, smarter, and more scalable.

Let’s connect. The future of Computer Vision is already here.

References:

- https://www.gartner.com/en/information-technology/glossary/computer-vision#:~:text=Computer%20vision%20is%20a%20set,information%20from%20the%20physical%20world.

- https://www.gartner.com/en/articles/hype-cycle-for-artificial-intelligence

- YouTube: Andrew Ng – Generative AI and Its Impact on CV

- CLIP: Connecting Vision and Language

- Stanford Lecture: Foundation Models and Transfer Learning